Database partitioning in simplest terms refers to dividing a big table into smaller tables for ease of maintenance, query optimization, etc.

Advantages:-

Faster querying - Queries can be parallelly processed for range and aggregate queries and match queries can be worked up with smaller subsets instead of searching in bigger tables thus reducing query time.

Data Archival - By dividing data into smaller tables, less frequently used or unused partitions can be moved to cheaper storage thus making more room for recent data and optimizing on the costs.

Maintenance - We can easily run bulk update and drop partition queries without obtaining a global lock on the entire table blocking our read-write operations.

Disadvantages:-

Data updation across partitions - Data updation becomes tricky especially when updation leads to the movement of table rows from one partition to another. Let’s again take the example of our score table. If update marks for id = 411 from 51 to 98, the said row will need to be transferred from partition score_4060 to score_80100.

Searching via indexes - If the index key is other than the partition key, maintaining those indexes also becomes a challenge. This becomes more difficult when partitions are separated across nodes (sharding). We can though manage this by maintaining individual indexes at the partition level but if we’ve to search using the index, it will have to search all the indexes and then collate the data before returning to the application or the application will have to maintain this collation for some cases (e.g. inheritance partitioning in postgres). Another solution is to maintain the indexes at partitioned table level and each partition table can keep a copy of the same index.

Try it out ✨

Let’s try to create a partition table on Postgres database. Please note that the below experiments are being done on Postgres database and the results may vary for other databases.

For this experiment, we can use locally installed Postgres or a docker container. The goal here is to get a postgres server running. We’ll create a table named score having columns id and marks, and partition it by marks.

CREATE TABLE score (

id SERIAL NOT NULL,

marks INTEGER NOT NULL

) partition by range(marks);

and create some partitions

create table score_0020 partition of score for values from (0) to (20);

create table score_2040 partition of score for values from (20) to (40);

create table score_4060 partition of score for values from (40) to (60);

create table score_6080 partition of score for values from (60) to (80);

create table score_80100 partition of score for values from (80) to (100);

If we check our table is created now

Note that the partitioned_table score is just a virtual table with no storage to it. Storage actually belongs to the partition tables score_0020, score_2040 and so on.

Let’s seed our database table with some random data

INSERT INTO score(marks)

SELECT floor(random()*100)

FROM generate_series(0,10000000);

And we’re done!!

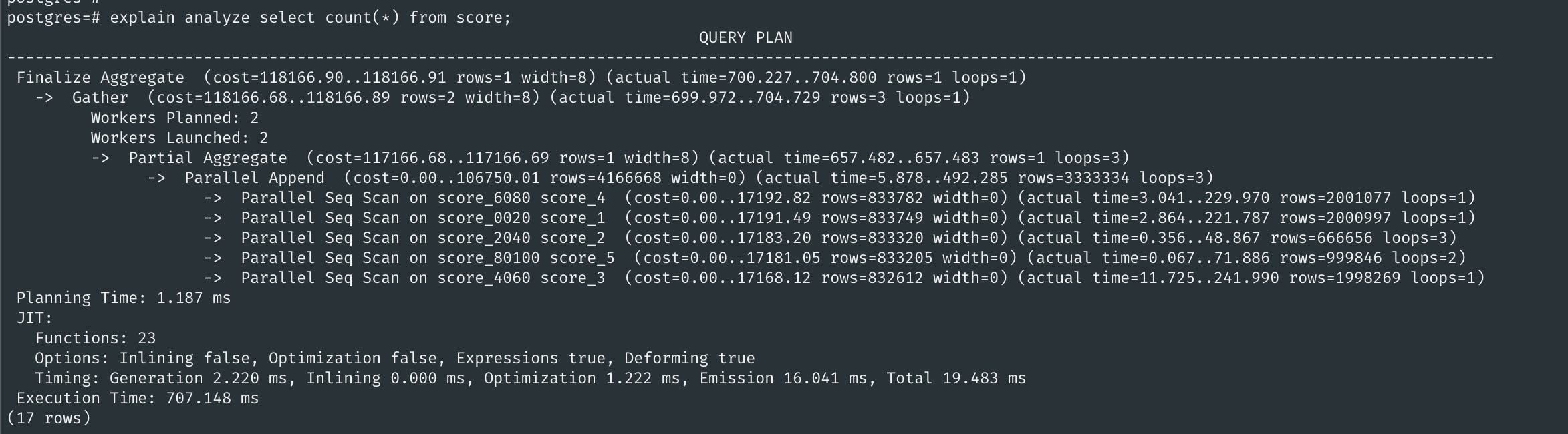

Let’s try out some queries

Let’s also try creating an index on column marks

CREATE INDEX marks_idx ON score(marks);

and check for the index status

Indexes automatically got created on partition tables as well. And the best part is our application does not need to worry about which partition to query from. PostgreSQL automatically propagates the index to each partition.

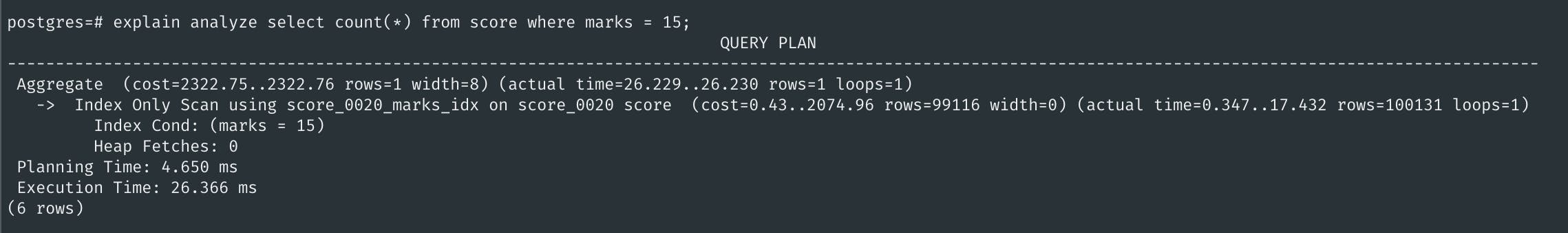

If we search for any marks, it went for searching only in the partition score_0020 and also utilized the index.

Let’s also create an unpartitioned table for benchmarking

create table score_unpartitioned(id serial not null, marks integer not null);

insert into score_unpartitioned(marks) select (random()*100) from generate_series(0,10000000);

create index score_unpartitioned_idx on score_unpartitioned(marks);

As we can clearly see, query is much faster with the partitioned table in comparison to an unpartitioned table as the query has to search just on the partitioned table.

An important point to note however is indexes, partitions and optimisation of queries depend on the size of data that we have and the acceptable response time for the queries that we have and of course, there is the cost factor associated with creating multiple indexes and partitioned tables. Number of partitions and strategy for partition also depends on the size of data and the type of queries that you have to run. YMMV!